BY TAUHID ZAMAN, OPINION CONTRIBUTOR — 04/22/19 04:15 PM EDT

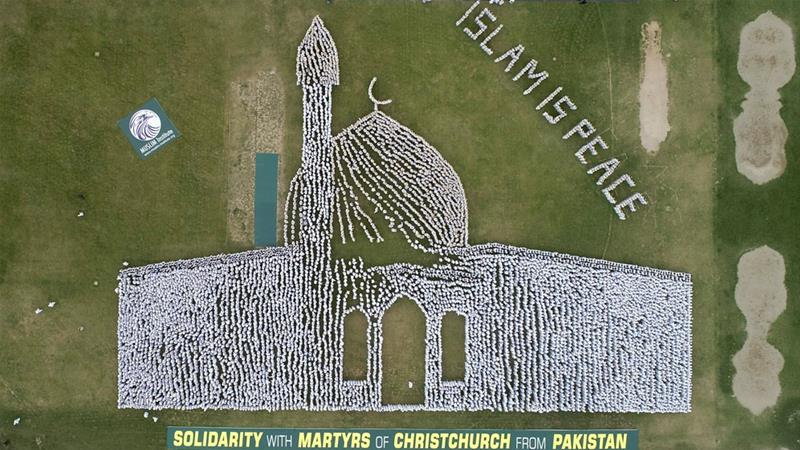

As a Muslim American, I was shocked by the Christchurch mosque shootings in New Zealand that took the lives of 50 completely innocent people and injured scores of others in March. The tragedy was made more sickening by the fact that the alleged gunman, reportedly a white supremacist, live-streamed the first attack on Facebook Live.

The fact that the attack happened during the Muslim Friday prayer, a religious service I attend regularly myself, left me deeply shaken and heartbroken. Besides privately grieving for those who perished in Christchurch, I also attended public rallies to show solidarity with the victims in the aftermath of the carnage in New Zealand.

But there’s something you won’t see me doing: calling for a crackdown on what some deem offensive speech on social media, and on what some consider extremist right-wing speech in particular, as many have done in the wake of the tragic Christchurch mosque shootings.

I know this may surprise some people. As a Muslim, I was particularly outraged by the slaughter in New Zealand. But I’m not outraged to the extent that I believe that:

- censorship of speech, whether by governments or near-monopolistic social media corporations, is an appropriate response to extremism; and

- censorship of speech is an effective way to combat extremism.

To be clear: I applaud the swift action of Facebook, Twitter, YouTube and other social-media sites to take down videos of the Christchurch shootings. Indeed, Facebook reported it removed or blocked 1.5 million videos in the first 24 hours after the attacks.

To me, these videos were nothing more than sick snuff films; pornographic videos of actual murders of innocent human beings. There’s simply no place for such vile videos on social media.

I also believe there’s no place, anywhere and at any time, for people to threaten and openly call for violence against others on social media. There are common-sense limits to free speech — and that limit is reached when it comes to encouraging and advocating violence.

What I oppose is the censorship of controversial speech, as opposed to dangerous speech, and I believe we unfortunately crossed that line on social media even before the New Zealand mosque shootings.

Most of us have now heard of social-media firms cracking down on, say, right-wing conspiracy theorist Alex Jones and the blocking of sites and posts by other conservatives.

Some may dismiss the complaints of conservatives that they are being unfairly targeted by social-media giants, but to do so ignores a potentially serious problem.

Conservative sites and posts that are not espousing violence are getting shut down or removed by social-media giants because their views are deemed offensive, not necessarily dangerous. And all this is happening in a somewhat arbitrary and potentially biased manner.

After the Christchurch tragedies, one of my concerns was that the justified removal of the mosque-shooting videos from social-media sites would lead to further censorship policies — and, sure enough, that’s what happened.

Late last month, Facebook announced that it intends to ban content that glorifies white nationalism and separatism, a major policy shift that’s slated to begin early this month.

But do we really want to go down this road? Do we really want huge social-media sites defining what is and what isn’t white nationalism and separatism — what is and what isn’t glorification of such causes? More importantly, do we really want social-media companies defining what’s offensive and controversial speech in general?

I’ve dedicated a large amount of my academic time to studying true online extremism on social media. By true extremism, I mean the dangerous views espoused by groups like ISIS that glorify death and terrorism and that explicitly encourage violence on a mass scale against others.

These are the truly dangerous groups and sites we need to keep our eye on moving forward, and it’s not easy keeping track of them on social media and in other dark corners of the internet.

The bottom line is that we have to get smarter and more realistic when it comes to dealing with extremist views on social media.

While it is important to prevent the spread of content that directly endangers people’s lives, we need to be careful to differentiate between those expressing clearly violent views and those simply expressing controversial views.

Social media companies should take action to protect us from direct harm, not become arbiters of what is and isn’t offensive. When it comes to free speech versus online extremism, we shouldn’t throw out the baby with the bath water.

Tauhid Zaman is associate professor of operations management at the MIT Sloan School of Management. His research focuses on solving operational problems involving social network data. Some of the topics he studies include predicting the popularity of content, finding online extremists and geo-locating users.