SAN FRANCISCO – Facebook is banning explicit praise, support or representation of white nationalism and white separatism on Facebook and Instagram, including phrases such as “I am a proud white nationalist,” following deadly attacks at two New Zealand mosques and a backlash from black history scholars and civil rights groups.

“Over the past three months, our conversations with members of civil society and academics who are experts in race relations around the world have confirmed that white nationalism and separatism cannot be meaningfully separated from white supremacy and organized hate groups,” Facebook said in a blog post Wednesday.

Starting next week, users who search for terms associated with white nationalism and separatism will be directed to resources that help people leave hate groups, the company said.

Facebook already prohibits explicit expressions of support for white supremacy. Extending that ban to white nationalism and separatism revises one of the company’s most controversial content moderation policies, which govern the speech of more than 2 billion users around the globe.

The social media company had previously defended the practice, saying it consulted researchers and academic experts in crafting a policy drawing a line between white supremacy and the belief that races should be separated. In training documents obtained by Vice’s Motherboard last year, Facebook said white nationalism “doesn’t seem to be always associated with racism (at least not explicitly).”

That position provoked a strong reaction from civil rights groups.

“By attempting to distinguish white supremacy from white nationalism and white separatism, Facebook ignores centuries of history, legal precedent, and expert scholarship that all establish that white nationalism and white separatism are white supremacy,” the Lawyers’ Committee for Civil Rights Under Law wrote Facebook in September.

Groups protest in Freedom Plaza with the U.S. Capitol in the background, on the one year anniversary of Charlottesville’s Unite the Right rally, Sunday, Aug. 12, 2018, in Washington. (Photo: Alex Brandon, AP)

Facebook’s policy reversal marks a major step toward reckoning with the vast amount of white nationalist content that continues to fester on social media services.

With a growing number of populist movements taking hold around the globe, technology companies have been reluctant to ban white nationalist content, wary of charges of censorship.

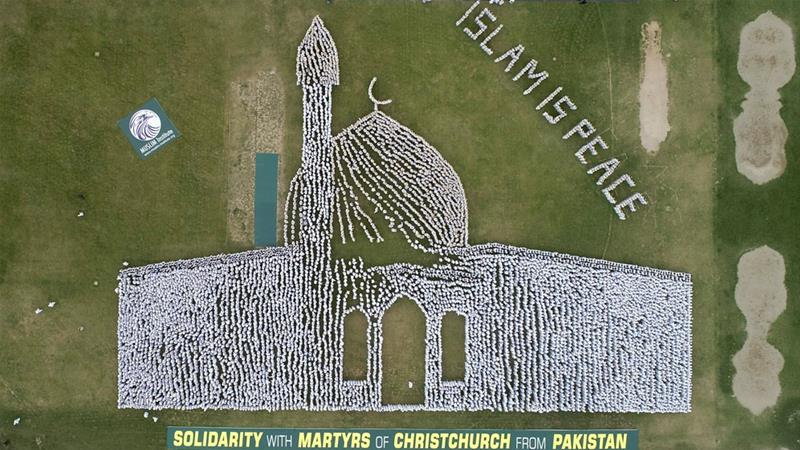

White nationalism hurtled back into the spotlight after a gunman opened fire at two mosques in Christchurch, New Zealand, killing 50 people. In a 74-page manifesto, he described himself as an “ordinary white man” whose goal was to “crush immigration and deport those invaders already living on our soil” and “ensure the existence of our people, and a future for white children.” He livestreamed the attack on Facebook.

“This is something that has been in the works for some time, but following the horrific attacks in New Zealand is more important than ever,” Facebook said in a statement.

Implicit or coded expressions of white nationalism and white separatism will not be banned right away as those are harder to detect, Facebook told Motherboard.

Rashad Robinson, president of the civil rights group Color of Change, called on other tech companies “to act urgently to stem the growth of white nationalist ideologies, which find space on platforms to spread the violent ideas and rhetoric that inspired the tragic attacks witnessed in Charlottesville, Pittsburgh and now Christchurch.”

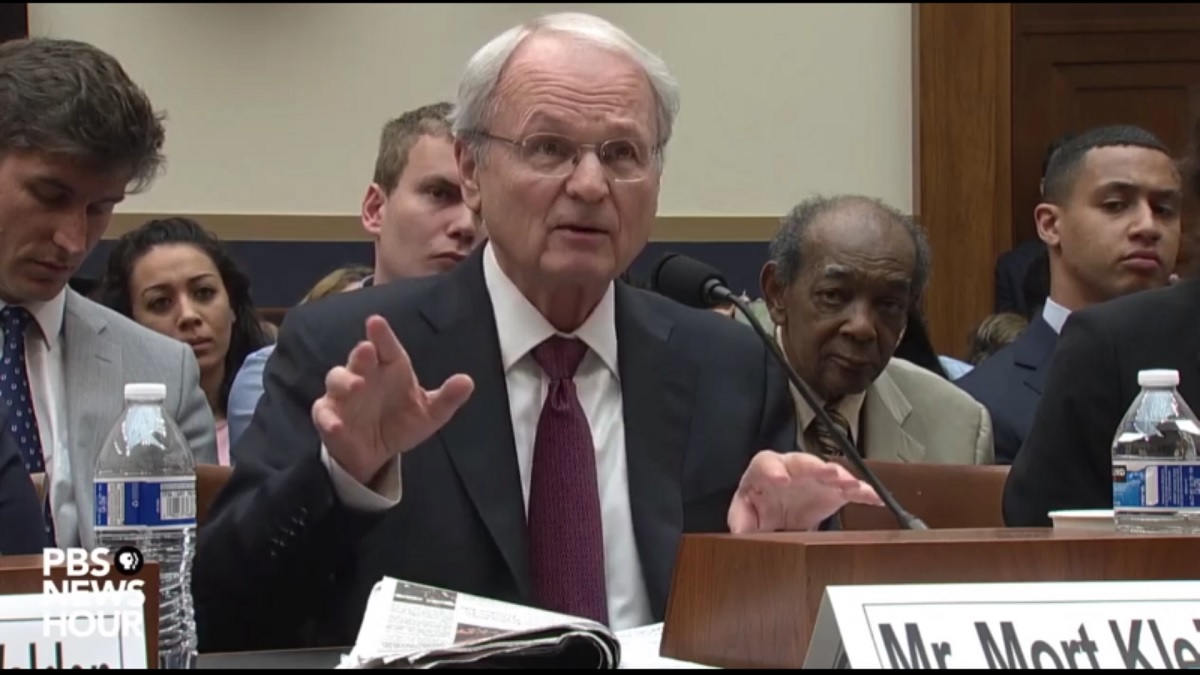

The House Judiciary Committee plans to hold a hearing in early April on the rise of white nationalism. Researchers say the rise in attacks by white supremacists and anti-government extremists is being fueled by growing political polarization, anti-immigrant sentiment and the ease with which proponents can spread their beliefs over the internet.

A 2016 study from George Washington University’s Program on Extremism in D.C. found that white nationalists had seen their followers grow by more than 600 percent since 2012, outperforming the Islamic State on nearly every metric, from follower counts to tweets per day.

In a tense political climate, hate speech – how to define it and how to root it out – has become a priority for Facebook, Twitter and Google’s YouTube. Facebook has taken steps to curb hate speech on its platforms using a combination of computer algorithms and thousands of moderators trained to scrub posts that violate the company’s rules.

After a 2017 white supremacist rally turned deadly in Charlottesville, Virginia, Facebook wrestled with how to police white supremacy on its platform, according to the documents leaked to Motherboard. Facebook stopped short of a policy prohibiting white nationalist or separatist content after expressing concern that such a ban would extend to black separatist groups, the Zionist movement and the Basque separatist movement.

After an outcry from civil rights groups, Facebook told Motherboard in September it was reviewing its policy on white nationalism and separatism.

Facebook said Wednesday it had considered “broader concepts of nationalism and separatism – things like American pride and Basque separatism, which are an important part of people’s identity.”

Content relating to separatist and nationalist movements such as the Basque separatist movement in France and Spain will still be allowed on Facebook.

“Going forward, while people will still be able to demonstrate pride in their ethnic heritage, we will not tolerate praise or support for white nationalism and separatism,” the company said.

Kristen Clarke, president and executive director of the Lawyers’ Committee for Civil Rights Under Law, said Facebook’s new policy is a “step forward in the fight against white supremacist movements.” But, she said, much work remains to be done.

“Putting in place the correct policy is a start, but Facebook also needs to enforce those policies consistently, provide meaningful transparency around any AI techniques used to address this problem, and adequately retrain its personnel. Without proper implementation, policies will prove to be just empty words, and white supremacy will continue to proliferate across its platform,” she said.